- [Blog](https://clickhouse.com/blog)

- [Engineering](https://clickhouse.com/blog?category=engineering)

Jimmy Aguilar, Elmi Ahmadov, and Robert Schulze

Aug 18, 2025 -25 minutes read

> **TL;DR**

>

> **We’ve completely rebuilt full-text search in ClickHouse**, faster, leaner, and fully native to the columnar database design.

>

> **This is a deep technical dive by the engineers who built it**, covering the new design from inverted indexes to query-execution tricks that skip reading the text column entirely. If you care about high-performance search without leaving your database, or love seeing database internals stripped bare, this one’s for you.

Full-text search (FTS) isn’t new to ClickHouse, but until now it ran on an older implementation with limits in performance and flexibility. We’ve gone back to the drawing board and re-engineered it from scratch, making search faster, more space-efficient, and deeply integrated with ClickHouse’s columnar design.

> You can register for the private preview of the rebuilt full-text search by following [this link](https://clickhouse.com/cloud/inverted-index-waitlist)

This is your **under-the-hood tour**, showing the core data structures — inverted indexes, finite state transducers, posting lists — and how the query pipeline was redesigned to cut I/O and speed up searches.

If you’ve ever needed to:

- run search-like analytics directly inside ClickHouse,

- avoid maintaining a separate search engine, or

- understand how modern text indexing works in a column store,

…you’ll get both the **why** and the **how**, backed by real examples and measurable performance gains.

Let’s start by looking at how the new index stores and organizes text, the foundation for everything that follows.

Imagine you’re storing product reviews, log messages, or user comments in ClickHouse. Each row contains a natural language document, i.e. a text written by human or a machine that follows a natural language grammar (no random IDs, no hash values).

Because ClickHouse is a **column-oriented database**, we store these documents one after another in a column of type [String](https://clickhouse.com/docs/en/sql-reference/data-types/string/). Each row holds a single document, which we can later search.

To make the content searchable, we split every document into a series of **tokens**, typically words. For example, the sentence:

`All cats like mice`

…would be tokenized as:

`[All, cats, like, mice]`

The default tokenizer splits strings by spaces or punctuation. More advanced tokenizers can split log messages into their components, for example, timestamp, severity, and message fields, or even extract **n-grams** for fuzzy search.

A **full-text search** then retrieves documents that contain a specific token. For example, to find the rows which mention `cat`, we might run:

```sql

SELECT [...] FROM [...] WHERE documents LIKE '% cat %'

```

(Notice the spaces — without them, we might accidentally match “edu cat e” or “multipli cat ion.”)

Full-text search can be implemented in two ways:

- **Full scans** – scan every row (slow)

- **Inverted indexes** – map each token to its containing rows (fast)

Inverted indexes make it possible to search massive datasets in milliseconds, and that’s exactly what ClickHouse now supports natively, with scale and performance in mind.

Before we dive into the details of how it works, let’s unpack the name “inverted index”, a simple idea that flips the usual document → terms mapping on its head, but can seem counter-intuitive if you’ve never worked with search engines before.

### Why is it called an inverted index?

When you read multiple documents from beginning to end, you can remember for each document the (unique) terms it contains. This `document → terms` mapping is sometimes called forward index.

An **inverted index** search flips that around: It stores a **term → documents mapping**. Given a term, it allows you to find all documents that contain it.

It’s like the back of a book: instead of reading pages to find a word, you look up the word to see which pages it’s on. That reverse lookup is what makes search engines fast, and it’s built into ClickHouse.

Now that we know what an inverted index is, it’s worth looking at where ClickHouse started. ClickHouse has always had ways to speed up text lookups, but these were approximations. To see why a true inverted index was needed, let’s trace the evolution from our earlier Bloom-filter-based approach to the new design.

## From bloom filters to real indexes

Before introducing native text indexes, ClickHouse already supported full-text search using the "bloom\_filter" "tokenbf\_v1" and "ngrambf\_v1" indexes. These are based on Bloom filters, a probabilistic data structure for checking whether the indexed documents contain a value.

Compared to inverted indexes, Bloom filters come with a few key limitations:

- **Hard to tune:** Using a Bloom filter requires manual tuning of the byte size, the number of hash functions, and an understanding how they affect the false positive rate. This often requires deep expertise.

- **False positives:** Bloom filters may answer that a value *might* exist even when it doesn’t. This means that additional rows have to be scanned, reducing efficiency of the search.

- **Limited functionality:** Bloom filter indexes only support a small subset of expressions in the WHERE clause, while inverted indexes are, in principle, more versatile.

Inverted indexes solve all three of these issues, and that’s why they now power full-text search in ClickHouse.

## How the new text index works

To understand how the new engine achieves its speed and precision, we first need to look at how the index is organized on disk and how queries interact with its building blocks.

### Index structure: dictionaries + posting lists

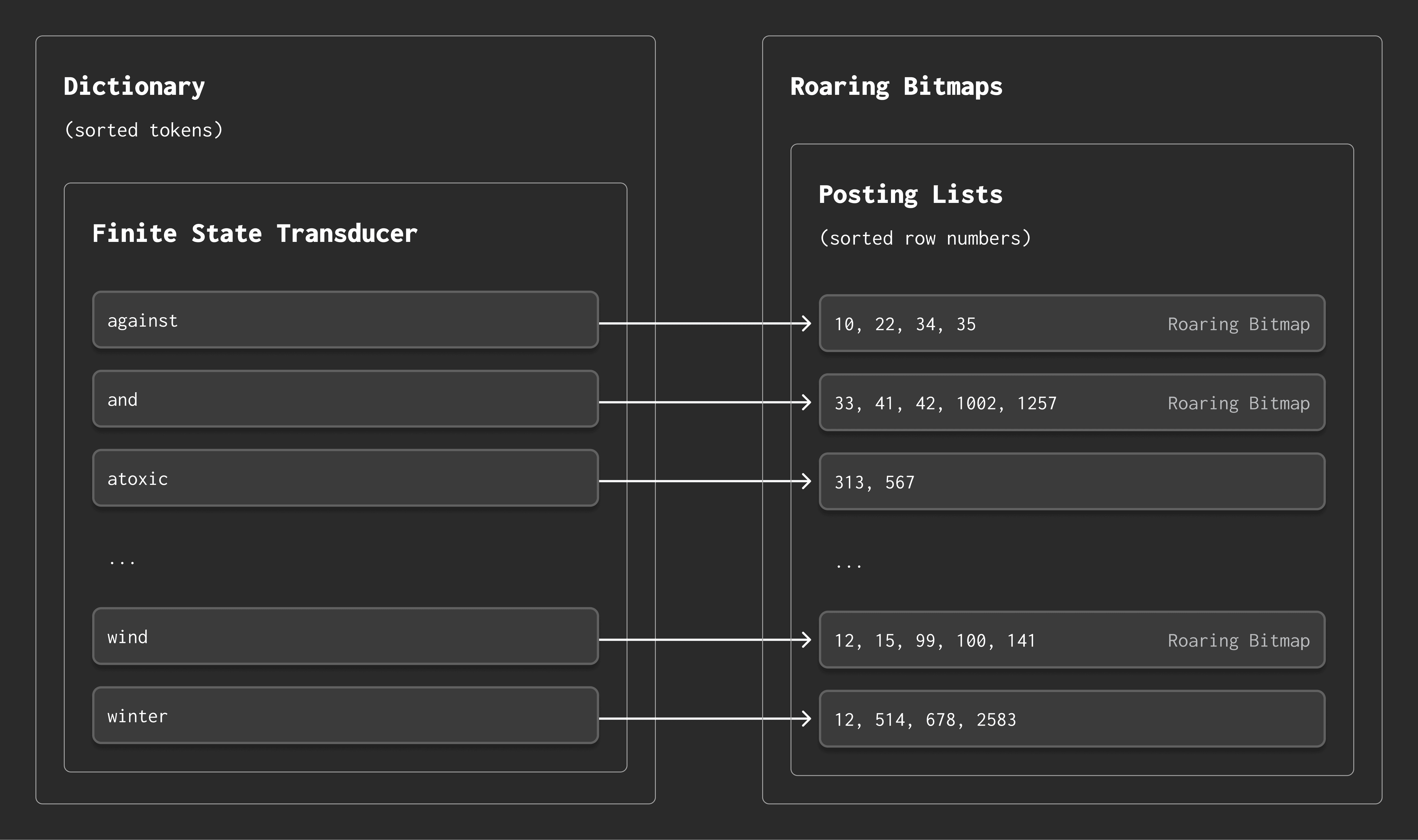

ClickHouse’s full-text search is powered by a **native inverted index**, also known as a *text index*. Conceptually, this index consists of two key components:

1. **A dictionary**, storing all unique tokens across all documents.

2. **Posting lists**, which record the row numbers of documents that contain each token.

Here’s how it works:

- When you search for a token, ClickHouse looks it up in the dictionary.

- If it is found, the dictionary returns the location of the corresponding posting list.

- The **posting list** is simply a list of row numbers — i.e., the documents — that contain that token.

Example:

- The token `wind` might appear in documents 12, 15, 99, 100, and 141.

- The token `winter` might appear in documents 12, 514, 678, and 2583.

This index design makes token lookups fast and efficient — even at massive scale.

Below is a simplified diagram of this structure:

Under the hood, ClickHouse stores and compresses both the dictionary and the posting lists using advanced data structures, which we’ll explore next.

### FSTs: Space-efficient dictionaries

To find a token quickly, we need an efficient dictionary structure.

The most basic approach for this is a sorted list of (token → posting list) pairs. This lets us do a fast binary search to find a token. Assuming that the documents are written in natural language, this opens up another useful optimization opportunity: **prefix sharing**.

For example, many tokens start with the same prefix:

- " win d", " win ter"

- " click ", " click house"

A plain list of tokens doesn’t take advantage of this - but a **Finite State Transducer (FST)** does.

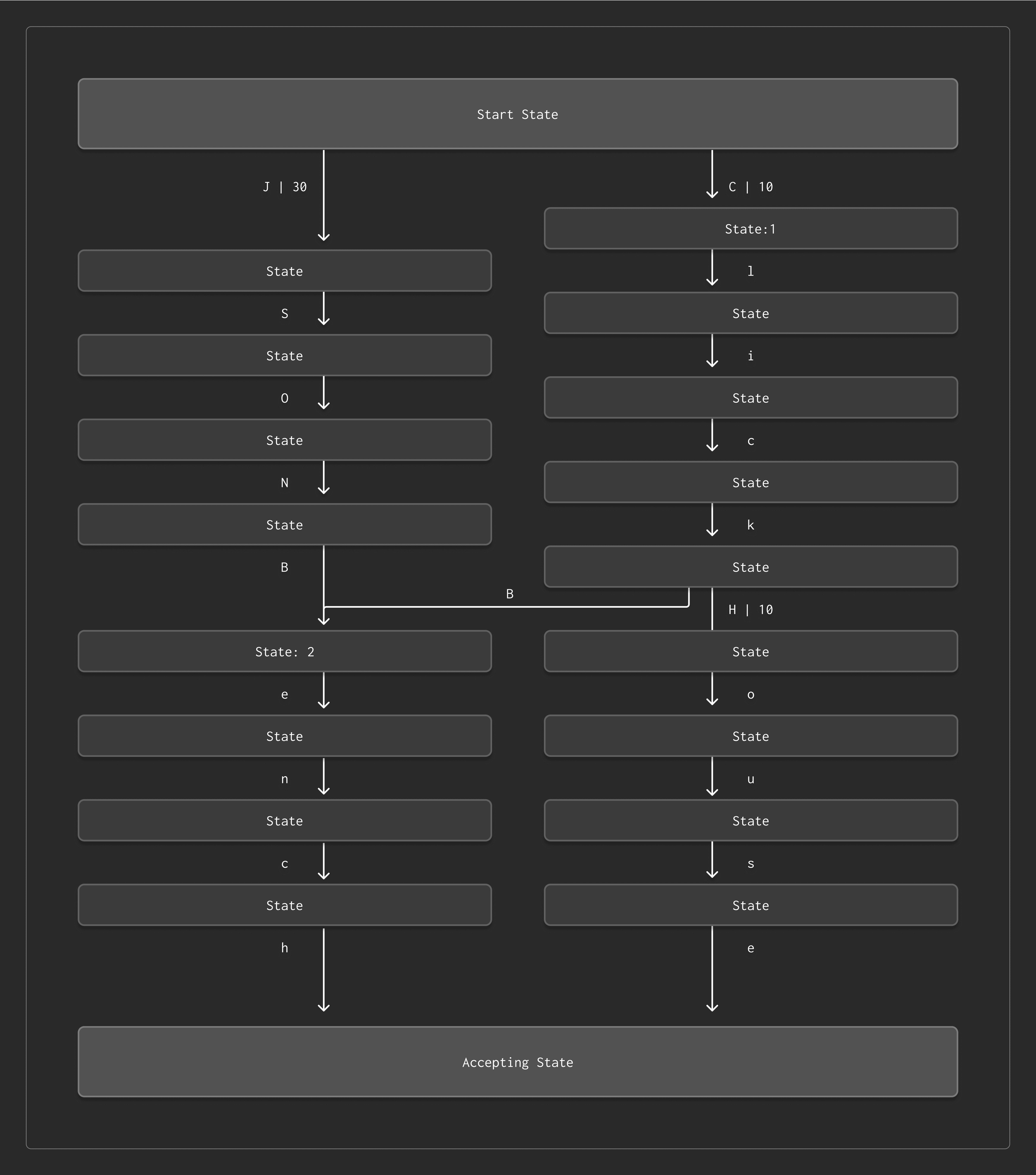

#### What is an FST?

An FST is a compact automaton—essentially a graph of characters—that encodes the dictionary in a highly compressed manner. It was originally designed to translate strings from one language to another, but it is also a great fit for text indexing. Systems like [Lucene](https://burntsushi.net/transducers/) and ClickHouse use FSTs to represent sorted dictionaries.

Instead of storing each token as a string, an FST:

- Represents shared prefixes and suffixes only once

- Encodes each token as a path through a graph

- Emits an *output* (e.g. the address of a posting list) when a path reaches a final “accepting” state.

This makes the dictionary extremely compact, especially when tokens share common parts.

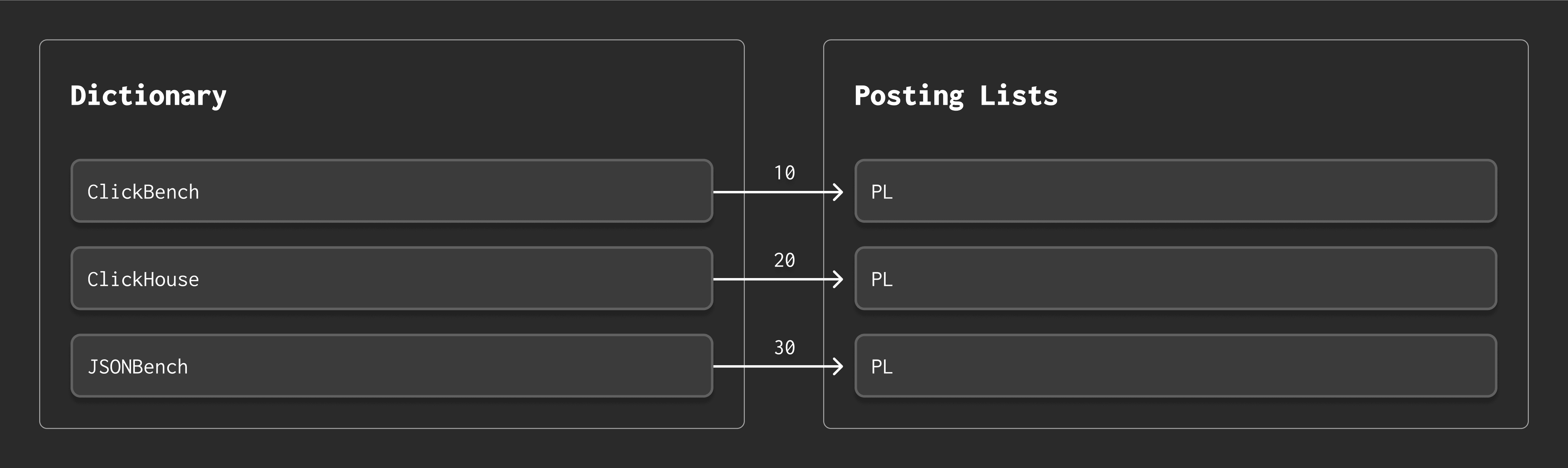

#### Mapping tokens to posting lists

The FST doesn’t store the posting lists themselves — just how to find them. For that, we associate each token with a **posting list offset**: the byte position where it begins in a separate file.

To retrieve the offset for a token, we walk the FST from the start state to an accepting state. Each transition may emit an integer, and we sum them all to get the final offset. This sounds a bit magical, but it’s how the standard FST construction algorithm works ([ref](https://link.springer.com/chapter/10.1007/3-540-44674-5_18)).

#### Example

Suppose we have three tokens:

`ClickBench`, `ClickHouse`, `JSONBench`

We’ll build the FST for the following small dictionary and see how the FST looks visually.

The FST encodes the tokens as transitions between characters. Shared prefixes like "Click" reuse the same path. At the end of each token, the FST emits its corresponding offset (10, 20, 30):

So the FST serves two roles:

- It lets us **quickly check** whether a token exists.

- It lets us **compute the byte offset** for that token’s posting list.

Together, this allows ClickHouse to store large token dictionaries in a compact and searchable manner.

### Roaring bitmaps: fast set operations

Each **posting list** stores the row numbers of documents that contain a given token.

We want to store these row numbers in a way that’s:

- **Compact** – to save space.

- **Efficient** – so we can quickly compute intersections and unions between posting lists.

Posting list intersections and unions matter when queries involve **multiple tokens** and combine them with logical operators, e.g.:

```sql

LIKE '% cat %' AND LIKE '% dog %'

```

#### Why Compression Matters

The traditional approach for compressing posting lists is to combine:

- **Delta encoding** – to calculate the differences between neighboring values.

- **Golomb-Rice encoding** – to compress the deltas efficiently.

This scheme offers excellent compression for typical natural language documents (a few frequent tokens and many rare ones). However, it processes data **bit by bit**, which isn’t ideal for modern CPUs that benefit from pipelining and SIMD instructions.

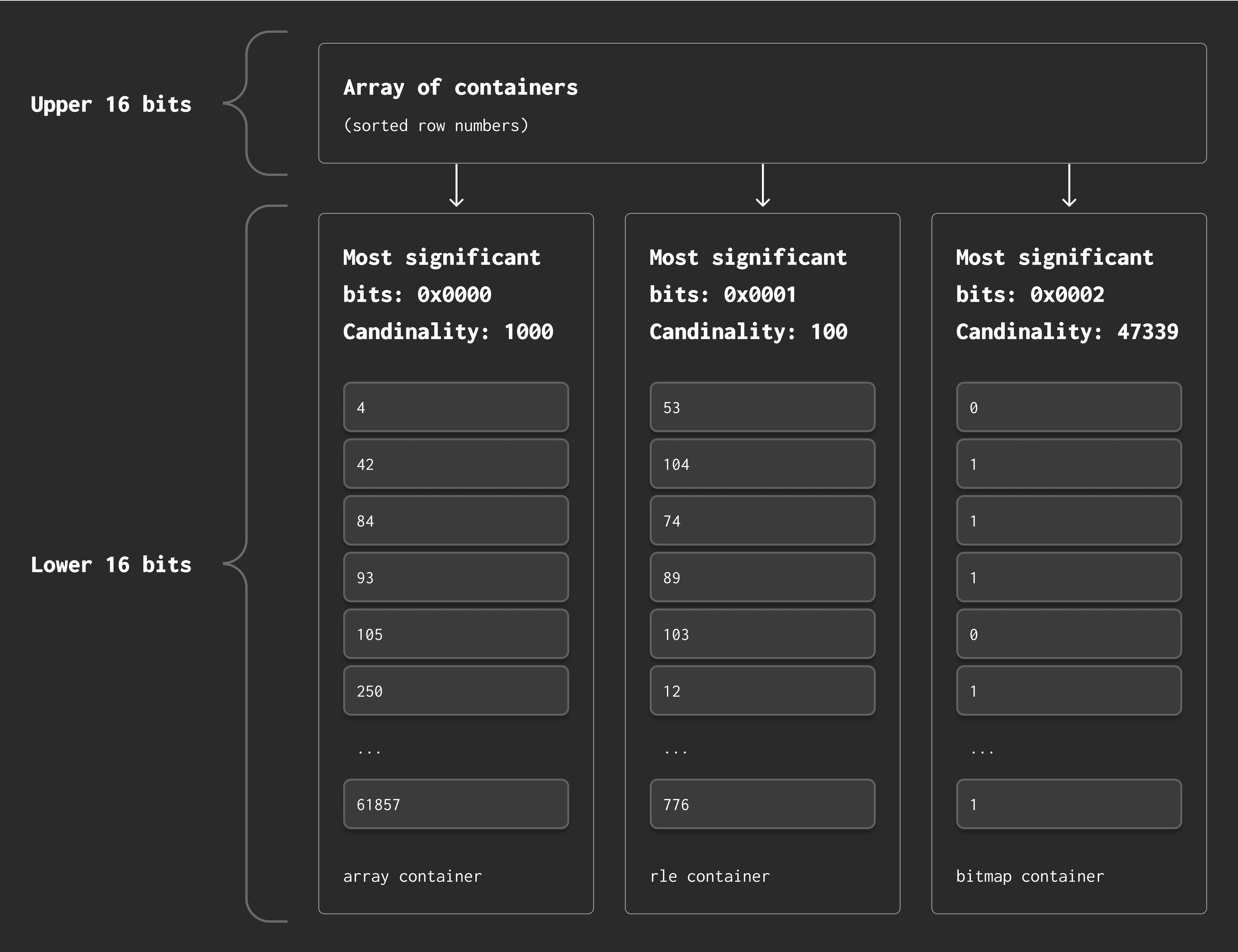

#### Enter roaring bitmaps

To keep things fast, ClickHouse uses [Roaring bitmaps](https://roaringbitmap.org/), a modern, high-performance format for storing large sets of integers.

The idea is simple:

- Think of a posting list as a **bitmap**, e.g., `[3, 5, 8]` → `000101001`

- Divide the bitmap into **chunks** of 65,536 values (2¹⁶).

- Store each chunk in a specialized **container**, based on its content:

- **Array** – for sparse data (few values)

- **Bitmap** – for dense data

- **Run-length encoding** – for long consecutive sequences

This design keeps storage compact and enables extremely fast set operations — including all combinations of AND, OR, and NOT between posting lists. Roaring bitmaps also use specialized SIMD-accelerated code paths for maximum speed.

#### Visual breakdown

Roaring bitmaps split each 32-bit row number into two 16-bit parts:

- The **upper 16 bits** select the container.

- The **lower 16 bits** are stored inside the container.

For example:

- Row number: `124586`

- Binary: `00000000 00000001 11100110 11101010`

- Upper 16 bits: `00000000 00000001` → selects container

- Lower 16 bits: `11100110 11101010` → value stored in container

Each container holds values for a specific range and is stored using the most space-efficient format. This makes it possible to scan, merge, and filter billions of row numbers with blazing speed.

We now have the two core building blocks of ClickHouse’s text index:

- FSTs for compact, prefix-sharing token dictionaries

- Roaring bitmaps for fast, compressed posting lists

We’ve now covered the two core structures — the dictionary and the posting lists — and how they’re stored efficiently. Let’s put these pieces together and see how the index is organized on disk.

## Storage layout: segments, granules, and files

Let’s put all the pieces together.

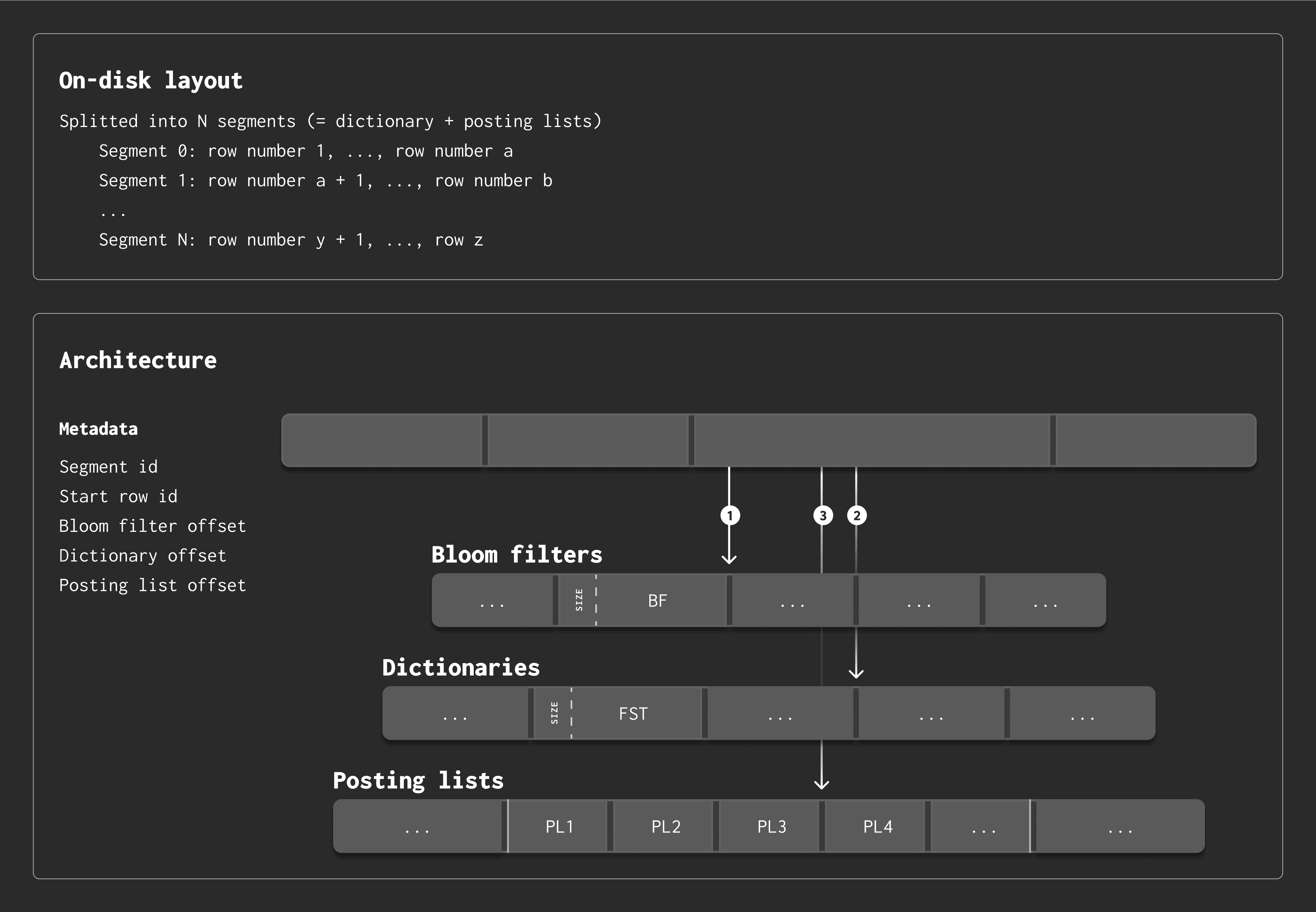

An inverted index consists of five files on disk:

1. An **ID** file — stores the segment ID and version. (see below what "segment" means)

2. A **metadata** file — tracks all the segments.

3. A **bloom filter** file — stores bloom filters to avoid loading dictionary and posting list files.

4. A **dictionary** file — stores all segment dictionaries as FSTs.

5. A **posting list** file — stores all posting lists as compressed sequences of integers.

*See the next diagram for an overview of how these pieces are laid out on disk.*

#### How the Data is Organized

The index is built per [part](https://clickhouse.com/docs/parts) and recreated during [merges](https://clickhouse.com/docs/merges). Internally, it’s split into **segments**, each of which contains:

- A dictionary (as an FST)

- Posting lists (as roaring bitmaps), one per token

The metadata file keeps track of:

- The segment ID

- The starting row ID

- Offsets into the Bloom filter file, dictionary file, and posting list file

In the diagram above, arrows marked **①**, **②**, and **③** represent the order in which files are loaded during lookups.

We shape the file sizes to match their content:

- **Bloom filters** are the smallest,

- **Dictionaries** are mid-sized,

- **Posting lists** are the largest.

#### What’s a Segment? And What’s an Index Granule?

To understand segments, we need to introduce **index [granules](https://clickhouse.com/docs/guides/best-practices/sparse-primary-indexes#data-is-organized-into-granules-for-parallel-data-processing)**.

- An **index granule** is the unit of indexing in ClickHouse (typically 8192 rows).

- A **segment** is a substructure within the granule, created to make indexing more memory-efficient.

Segments are split by dictionary size, not by row count. This makes sure that each segment holds FST dictionaries of roughly the same size. This approach avoids out-of-memory errors during index creation on large datasets.

Each segment is self-contained and holds:

- A row ID range

- An FST dictionary

- A set of posting lists

Smaller segments reduce memory pressure, but may lead to higher storage usage due to redundant storage of tokens in multiple segment FST dictionaries. Segmenting is off by default but can be enabled for advanced workloads.

#### What happens during an index lookup?

Here’s how ClickHouse finds rows for a search token:

1. **Traverse all dictionaries**:

The token is searched in every segment’s FST. When the FST reaches its accepting state, we know the token exists in that segment.

1. **Sum up FST output values**:

These values give us the offset into the posting list file.

1. **Load the posting list**:

- If it’s a cold run, the posting list is read from disk.

- If it’s a hot run, it’s already cached in memory.

*Note: The row IDs stored in posting lists are **relative** to the segment’s starting row. They’re extrapolated to full granule row numbers during lookup. (More on that in direct index usage optimization below.)*

With the fundamentals in place, let’s look at the major improvements we’ve made to the text index in this release, covering everything from API design to memory footprint and smarter granularity.

Over the past three months, we’ve made significant improvements to full-text search in ClickHouse, from user-facing changes to deep storage optimizations. Here’s what’s new (and what’s coming):

### 1\. A cleaner API

We renamed the index from “inverted” to “text” and reworked the syntax to be more intuitive.

You can now pass parameters as key-value pairs directly in the INDEX clause. This makes the index both easier to use and more flexible.

```sql

CREATE TABLE tab

(

[...],

documents String,

INDEX document_index(documents) TYPE text(tokenizer = 'default')

)

ENGINE = MergeTree

ORDER BY [...];

```

This creates a text index using the default tokenizer (which splits text by non-alphanumeric characters). You can also specify alternate tokenizers like 'ngram', 'split', and 'no\_op', and provide additional settings like the n-gram size.

### 2\. New split tokenizer

We’ve added a new tokenizer type called **split** that is designed for semi-structured text such as logs or CSV-style data.

Unlike the default tokenizer, which tries to identify words based on general rules, the split tokenizer **only** breaks text at specific separators you define (by default: `,`, `;`, , `\n`, and `\\`).

This makes it ideal for:

- CSV-style input

- Log messages with consistent delimiters

- Any scenario where you want precise control over token boundaries

#### Example: CSV-style input

Let’s compare the default tokenizer with the new split tokenizer for a CSV-style string

```sql

SET allow_experimental_full_text_index = 1;

CREATE TABLE tab_csv_default (

id Int64,

str String,

INDEX idx_str(str) TYPE text(tokenizer = 'default')

)

ENGINE = MergeTree()

ORDER BY id;

CREATE TABLE tab_csv_split (

id Int64,

str String,

INDEX idx_str(str) TYPE text(tokenizer = 'split', separators = [',', ';', '